Summary

AI has helped people with disabilities for years, with tasks like reading text aloud or turning speech into text. But as AI grows more capable, we must design it with care. Some tools are just for fun, but others, like those that help a blind person cross the street, must be safe and clear. People need to know how AI works, when to trust it, and when to double-check it. By making AI fair and easy to understand, we build tools that help everyone, not just a few.

Artificial intelligence might feel like the hot new thing—but for folks working in accessibility, AI has been quietly powering essential tools for decades. Whether it’s reading a street sign out loud, helping someone navigate indoors, or turning spoken words into text, inclusive technology has always been one of the proving grounds for AI.

That’s why this conversation—about AI, inclusion, and trust—isn’t just about what we can do. It’s about how we design for real people, especially those whose lives may depend on getting it right.

The Quiet History of AI and Accessibility

When we think of AI today, we picture chatbots or flashy image generators. But if you’ve ever used your phone to read text from a photo, that’s AI too. Optical Character Recognition (OCR) has been around for decades, and some of its earliest applications, such as reading machines, were built with blind users in mind. It’s now baked into everything from receipt scanners to document readers.

OrCam, a device that attaches to glasses, reads text and signs out loud. Voice recognition, the technology behind smart speakers like Alexa, was built and refined in part by people who needed it to work because of disability. Now it’s mainstream.

We’ve even seen AI support people with dementia by mimicking a loved one’s voice, helping them remember what’s next in their day. Tools like “Be My AI” go beyond basic image recognition to describe the mood, context, and emotional weight of a scene—designed with input from blind users and audio describers.

None of these were built for flash. They were built for function.

Trust Isn’t Optional

The heart of inclusive AI isn’t just what it does. It’s how much people can trust it. That trust needs to be earned, especially when the stakes are high.

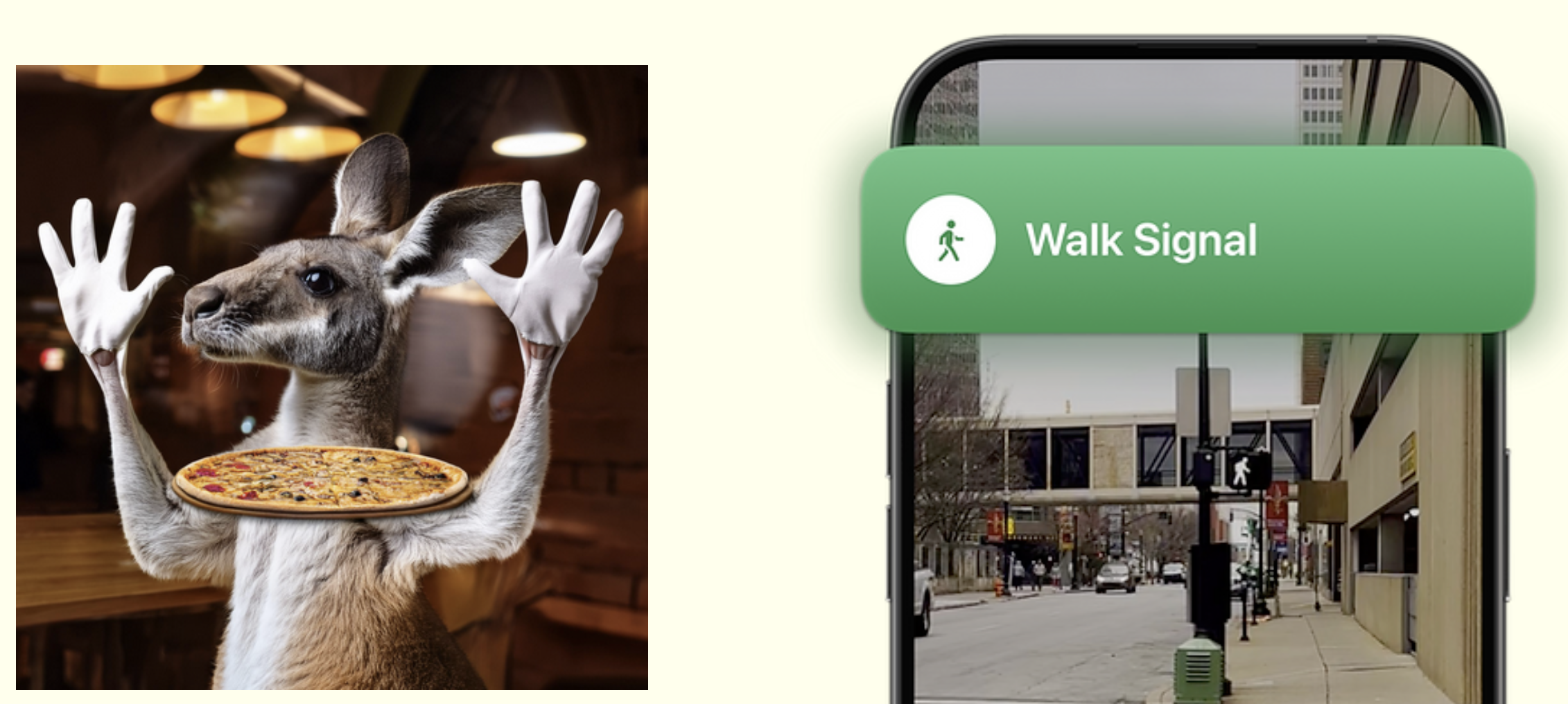

I often show two examples side by side. One is a silly AI-generated image of a kangaroo flipping pizza. If it’s weird, who cares? It’s fun.

But then there’s an app like OKO, which uses AI to tell a blind pedestrian when it’s safe to cross the street. If it’s wrong—just once—it could cost a life. Suddenly, a 99.9% success rate isn’t good enough. That’s where inclusive design principles have to step in.
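Why isn’t 99.9% good enough? A quick back-of-the-envelope calculation helps. The figures below are assumptions for illustration only, not OKO data: each crossing decision is independent, it is correct 99.9% of the time, and the person crosses 10 streets a day.

```python
# Back-of-the-envelope sketch: how often does a "99.9% accurate" system
# get it wrong for someone who relies on it every day?
# Illustrative assumptions (not OKO data): independent decisions,
# 99.9% accuracy per decision, 10 street crossings per day.

def prob_at_least_one_error(accuracy: float, decisions: int) -> float:
    """Chance that at least one of `decisions` independent calls is wrong."""
    return 1 - accuracy ** decisions


ACCURACY = 0.999
CROSSINGS_PER_DAY = 10

for days in (1, 30, 365):
    n = CROSSINGS_PER_DAY * days
    p = prob_at_least_one_error(ACCURACY, n)
    print(f"{days:>3} days ({n:>4} crossings): {p:.1%} chance of at least one wrong call")
```

Under these assumptions, the chance of at least one wrong call is about 1% on a single day, roughly 26% over a month, and over 97% over a year. A rate that sounds excellent for a pizza picture becomes a near-certainty of failure for someone who depends on it daily.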

We need to ask ourselves:

- Who gets harmed if this fails?

- How transparent are we being?

- Can users understand and control the system?

- Is there an easy exit when things feel wrong?

These are not technical questions. They are design questions—and ethical ones.

Trauma-Informed Design: A Critical Lens

Trauma-informed design has taught us something important: people need control. They need to know why something is happening, and they need to be able to stop it without jumping through hoops.

For example, imagine a person trying to use an app to report domestic abuse. If the app doesn’t offer a quick and quiet exit, it can put that person at even greater risk. That’s not just bad design—it’s dangerous.

Now shift that mindset to business tools. Say someone is using AI in QuickBooks to migrate 10 years of financial data from QuickBooks Desktop to QuickBooks Online. If they feel something is off, they need the power to pause or restart without losing everything. It’s not just about being efficient; it’s about helping people feel safe.
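“Pause or restart without losing everything” maps onto a familiar engineering pattern: do the work in small batches and save a checkpoint after each one. The sketch below is hypothetical and is not how QuickBooks actually migrates data. The class, its methods, and the batch size are invented for illustration, and the “copy” step is a stand-in for real work.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ResumableMigration:
    """Hypothetical sketch: copy records in small batches and save a checkpoint
    after each one, so pausing or interrupting never loses finished work."""
    source: list
    batch_size: int = 100
    migrated: list = field(default_factory=list)
    checkpoint: int = 0  # index of the first record not yet migrated

    @property
    def done(self) -> bool:
        return self.checkpoint >= len(self.source)

    def run(self, max_batches: Optional[int] = None) -> None:
        """Migrate batches until finished, or until `max_batches` have run
        (standing in for the person pressing "pause")."""
        batches = 0
        while not self.done and (max_batches is None or batches < max_batches):
            end = self.checkpoint + self.batch_size
            batch = self.source[self.checkpoint:end]
            self.migrated.extend(batch)    # stand-in for the real copy step
            self.checkpoint += len(batch)  # advance only after the batch lands
            batches += 1

    def restart(self) -> None:
        """Start over, but only when the person explicitly asks to."""
        self.migrated.clear()
        self.checkpoint = 0


# Usage: pause after 3 batches, then resume exactly where we left off.
job = ResumableMigration(source=list(range(1_000)), batch_size=100)
job.run(max_batches=3)
print(job.checkpoint, job.done)
job.run()
print(job.checkpoint, job.done)
```

The design choice that matters for trust is that pausing and restarting are separate actions. Pausing keeps all completed work, and starting over happens only when the person chooses it.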

Explainability Builds Confidence

We’ve all seen news stories about people getting fired or denied loans by AI systems—and having no idea why. That’s the opposite of inclusive design.

Explainable AI (XAI) means showing your work: what data was used, how the system got to its recommendation, and what the person can do next. Tools like Writer (used by Intuit’s content designers) do this well. They show the sources, logic, and summaries step by step.

This kind of transparency helps people feel like they’re working with the system, not being judged by a black box.
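To make “showing your work” concrete, here is a minimal sketch of what an explainable recommendation might carry: the data used, the reasoning steps, and what the person can do next. It is a hypothetical data structure, not Writer’s format or any Intuit API, and the invoice example is made up.

```python
from dataclasses import dataclass


@dataclass
class ExplainedRecommendation:
    """Hypothetical shape for an AI recommendation that 'shows its work'."""
    recommendation: str
    data_used: list[str]        # what data the system looked at
    reasoning_steps: list[str]  # how it got from that data to the recommendation
    next_steps: list[str]       # what the person can do about it

    def explain(self) -> str:
        lines = [f"Recommendation: {self.recommendation}", "", "Based on:"]
        lines += [f"  - {item}" for item in self.data_used]
        lines += ["", "How we got here:"]
        lines += [f"  {i}. {step}" for i, step in enumerate(self.reasoning_steps, start=1)]
        lines += ["", "What you can do:"]
        lines += [f"  - {action}" for action in self.next_steps]
        return "\n".join(lines)

    def is_explainable(self) -> bool:
        # No data, no reasoning, or no way forward means it is still a black box.
        return bool(self.data_used and self.reasoning_steps and self.next_steps)


# Made-up example for illustration.
rec = ExplainedRecommendation(
    recommendation="Send a payment reminder for invoice #1042",
    data_used=["Invoice #1042 due date", "Customer's past payment history"],
    reasoning_steps=["Invoice is 30 days past due",
                     "Customer usually pays within a week of a reminder"],
    next_steps=["Review and edit the reminder", "Dismiss this suggestion"],
)
print(rec.explain())
```

The `is_explainable` check is one way a team could refuse to ship a recommendation that can’t answer “why?” and “what now?”.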

The Branding Trap

If a function requires individual trust, it must not rely on color alone to communicate that.

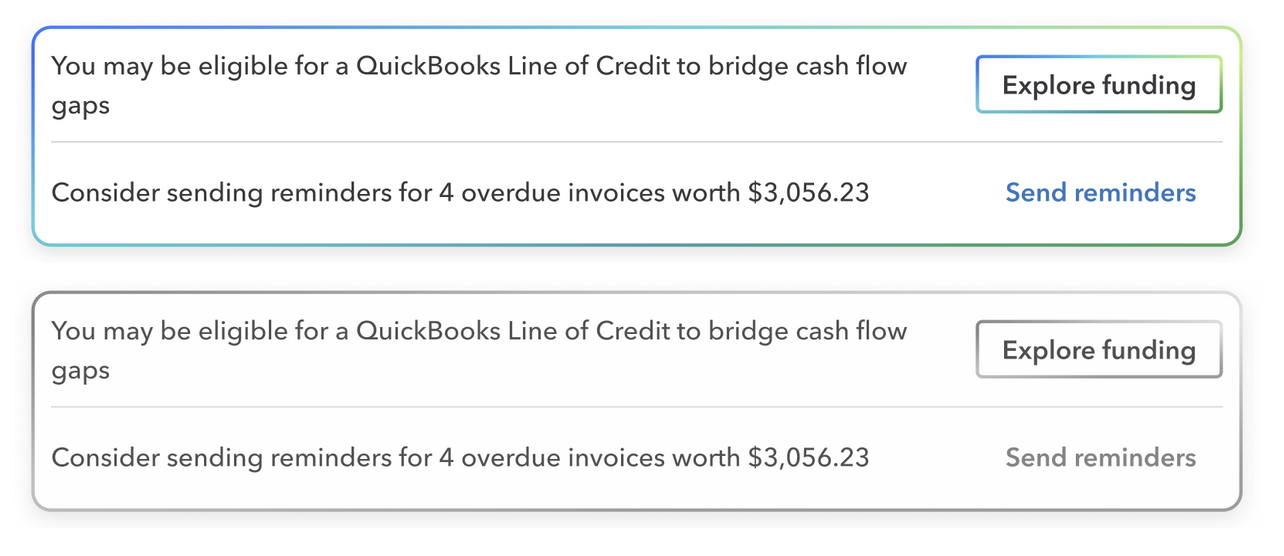

At Intuit, we’ve been exploring ways to label AI-powered features. That might mean gradients, sparkles, or a logo that says “Intuit Assist.” But here’s the big question: when should we highlight that something is AI?

If everything has sparkles, then nothing feels important. And if something really needs to be double-checked, like a payroll recommendation or a customer invoice warning, it should stand out.

Here’s an example: QuickBooks suggests a small tweak to a zip code. Should that be labeled as “AI”? Maybe not. But if the system recommends sending a client a stern reminder about an overdue invoice, that deserves a moment of pause. A little friction can be a good thing.

Branding should follow trust—not the other way around.
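One way to make “branding follows trust” concrete is to tie the label and the friction to the stakes of a suggestion rather than to the mere presence of AI. This is a hypothetical policy sketch, not how Intuit Assist actually decides. The two tiers, the `reversible` flag, and the returned fields are assumptions for illustration.

```python
from enum import Enum


class Stakes(Enum):
    LOW = "low"    # e.g., tidying up a zip code
    HIGH = "high"  # e.g., a payroll change or a stern message to a client


def presentation_for(stakes: Stakes, reversible: bool) -> dict:
    """Hypothetical policy: decide how visibly to flag an AI suggestion
    and whether to ask the person to confirm before it takes effect."""
    if stakes is Stakes.LOW and reversible:
        # Quiet help: no special AI badge, just an easy undo.
        return {"label_text": None, "require_confirmation": False, "offer_undo": True}
    # High stakes, or hard to undo: say it in words (not color alone)
    # and add a deliberate moment of friction before acting.
    return {
        "label_text": "Suggested by AI. Please review before you continue.",
        "require_confirmation": True,
        "offer_undo": reversible,
    }


print(presentation_for(Stakes.LOW, reversible=True))
print(presentation_for(Stakes.HIGH, reversible=False))
```

Note that the label is text rather than a gradient or sparkle, so it still works for people who can’t perceive the color.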

AI Has Bias, Too

We also need to stay honest about bias. If your AI was trained mostly on data from one type of user, it may not perform well for others. That’s access bias: what we build is shaped by what we have easy access to. Just think of how search results shape our worldview. Now imagine what happens when those same patterns are baked into AI models that make decisions on our behalf.

Inclusion has to be part of the training process. Otherwise, we just recreate the same old barriers with shinier tools.
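One practical habit that follows is to never trust a single overall accuracy number. Break evaluation results down by user group instead. The sketch below uses made-up toy data and hypothetical group names. It shows how a respectable overall score can hide much weaker performance for a group that was underrepresented in training.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, predicted, actual) tuples.
    Returns {group: accuracy} so differences between groups stay visible."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


def overall_accuracy(records):
    records = list(records)
    return sum(predicted == actual for _, predicted, actual in records) / len(records)


def largest_gap(per_group):
    """Difference between the best-served and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())


# Toy data (made up): the model was trained mostly on the majority group.
results = (
    [("majority group", "yes", "yes")] * 9
    + [("majority group", "yes", "no")] * 1
    + [("underrepresented group", "yes", "yes")] * 6
    + [("underrepresented group", "yes", "no")] * 4
)

per_group = accuracy_by_group(results)
print(f"overall: {overall_accuracy(results):.0%}")
for group, acc in per_group.items():
    print(f"{group}: {acc:.0%}")
print(f"gap: {largest_gap(per_group):.0%}")
```

Here the overall accuracy is 75%, but that average hides 90% for one group and 60% for the other.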

What’s Next?

We’re just at the beginning of what AI can do to support accessibility. From indoor navigation to detecting illness by voice, we’re seeing real breakthroughs. But the more we rely on AI, the more we have to build systems that are transparent, understandable, and safe.

As designers, developers, researchers, and product managers, we have a responsibility to ask tough questions:

- Are we adding delight or just showing off?

- Are we giving people enough information to trust us?

- Are we adding just enough friction to protect people from harm?

It’s not about being perfect—it’s about being thoughtful. And that’s what inclusive AI is really about.

[This article is based on my presentation to kick off a series of Inclusive AI sessions at Intuit. It was written with AI assistance.]