This is a restored article from a workshop I attended in 2018. I still apply elements of what I learned that day. Some links have been lost over time; these now point to archived versions on the Internet Archive.

The problems with web permissions – W3C User Consent and Permissions Working Group

- Jo Franchetti

- developer advocate at Samsung

- @thisisjofrank (now on Mastodon)

- A Crisis of Permissions

Much of the internet’s value rests on users’ trust in it. As that trust is broken, the internet is devalued.

The overload of permission requests is causing fatigue and eroding trust. People are becoming numb to cookie notices and pop-ups, which leads them to click “accept” without thinking.

Showing a permission request as soon as the page loads gives the user no chance to trust the site, understand what it represents, or see why they should allow the service.

Confusing wording, especially when used for nefarious purposes:

“uncheck here to continue to not receive notifications”

Bundled, implied, and inherited permissions: you accept a master permission request without knowing it carries a set of sub-permissions.

Blocking content until permissions are granted keeps users from building trust and understanding the value of the site. Tumblr, for example, uses a full-page takeover.

Conversation points

(audience includes browser manufacturers, publishers, academia, and W3C leaders)

- What is required by law? Some of the complexity comes from legal requirements.

- Users decline data sharing, but their data is still shared

- Context: what can actually be inferred from shared data, for instance geolocation revealing a home location

- Can consent be withdrawn easily?

- Are users really informed, or is it too complex?

- Permissions process should also follow WCAG accessibility guidelines

- Trust is gone; people already assume the system is corrupt.

- Signal vs. noise: users are confused into consenting.

- Understanding a company’s use of data is overwhelming even from the inside, due to fragmentation in the development process. So how do you legitimately declare your data usage?

- Sensors that don’t require permissions can be used for fingerprinting. What should we be prompting for, and are there permissions that need to be retroactively applied to existing APIs?

- Users throw up their hands. With so many permissions and sensors in use, people may give up in frustration, whether from fatigue or fatalism.

- Do Not Track included transparent information, such as the privacy policy location and the opt-out process.

- Should the settings for what you consent to live in the browser or on the website?

- Plain language may make a request easier to read, but it doesn’t guarantee a full grasp of what the permission allows, such as a camera capturing private details that go beyond the expected use (a selfie or light/color detection).

- The problem is with the premise that we need to obtain permission to do these things. Do we need these capabilities at all? Should browsers be sharing this in the first place? Apple’s Storage Access API turns the function off, and a user who allows it is open to abuse. So the prompt happens at the device level, not on the web. What features do we believe the web platform needs? Should there be a baseline for what browsers do not share?

- We can build an API that restricts access, but a site that was never granted a permission can use a polyfill built on a previously consented API to pull in the same information, such as using camera access to determine location or sound.

- Good examples: <input type=file> and drag and drop, where permission is granted implicitly through the platform.

- The browser should be handling the permissions model.

- There are three actors:

- The perfect actor

- The absolute devil

- Everyone else: people who provide a useful service but also want as much data as possible to earn a profit. It’s not necessarily that they want that much data; the advertising industry may force them to collect it. Publishers would prefer that browsers stop this.

- Advertising is at the center of most data abuse. The advertising industry demands this data, forcing sites to abuse the process. Brave is an example of a browser that does not allow third-party data exchange.

- The internet has grown quickly through organized chaos. Business models are built on this, and not all of them abuse the system. But we shouldn’t let this prevent the next generation of applications.

- Should the web give access to sensors and devices, for example in IoT? How do we enable the web of things while protecting privacy, such as when discovering other devices? Some devices may not have a display, so permissions are requested through secondary interfaces.

- It is still difficult to understand where information goes downstream from a single page.

- Who owns this problem?

- web platform

- publisher

- government/laws.

- All of these move at different speeds

- The web is inherently casual. There is value in having a distinction between applications and the web. The act of installing software was a meaningful step; no equivalent step exists when visiting a website.

To whom do sites answer?

- W3C Permissions Workshop

- September 2018

- Martin Thomson

Event ownership

In the early days

Loading a site

- Condition: visit site, click link

- Accountability towards user: loading spinner, status bar

- Redress: stop button

Rendering a page

- Condition: visit a site

- Accountability towards user: site is visible

- Redress: Close the window

JavaScript

- Condition: visit site

- Accountability towards user: slow script dialog (?), OS(?)

- Redress: close window

Does visiting a site and staying on the page constitute consent to run JavaScript and to set third-party cookies or share data? What is the redress? Is it leaving the page?

window.open

- Condition: none at first, later an engagement gesture

- Accountability towards user: a new window is visible

- caveat: popunders

- Redress: close the window

<input type=file>

- Condition: selection of file to upload

- Accountability towards user: none

- Redress: none

Geolocation

- Condition: the first real attempt to gain permission for access to sensitive information; the first API to use the doorhanger

- Accountability towards user: varies

- Redress: none

How do you make whoever holds this information accountable to the person who provided it?

How does the right to be forgotten come into effect?

Once most sensors send data, it cannot be retracted. After granting geolocation permission, there is no way to define how the data is used after approval.

getUserMedia

- Condition: doorhanger

- Accountability towards user: on-screen indicators, camera light

- Redress: varies

Mozilla doorhanger

Notifications

- Condition: doorhanger

- Accountability towards user: popup message

- Redress: action menu on notification, or byzantine mess of hard-to-find, browser-specific menus

Push

- Condition: (see Notifications)

- Accountability towards user: (see Notifications)

- Redress: (see Notifications)

For Discussion

- Browsers often infer permission

- Design language and expectations both evolve

- Calling sites to account requires more than passing the problem on to users.

- Crawling the web, looking for bad behaviors, to determine consequences.

Why would content blocking not be considered the same as malware detection?

Malware detection is a reactive strategy: wait until something bad has happened, then stop further bad behavior. It’s better to lay a foundation that prevents bad behavior in the first place.

Detecting misbehavior is one strategy, but it’s better to prevent it beforehand.

Some exploits cannot be detected until they are abused. People are good at detecting potential exploits within API functionality.

85% of zero-day attacks exploit memory-unsafe code. These could be avoided with a better foundation.

WebRTC was used to detect users’ IP addresses. This required a sequence of actions and a non-standard path. But because WebRTC legitimately exposes IP addresses, detecting the misbehavior means separating valid use from invalid use.

- It’s important to incorporate privacy into the initial specifications. Otherwise it is going to require patching.

Trackers and malicious actors are why we can’t have good things. A small proportion of people is enough to create significant conflict.

- If you are recording in a two-party consent state, consent from all parties is required by law; for instance, you need consent to record a Skype session.

There is a need for an accountability pattern on the web.

Engagement is different from a gesture such as clicking or typing. Engagement could mean a site I interact with regularly, from which the browser infers that certain permissions should be granted.

Permission Persistence

W3C User Consent and Permissions Working Group

September 26, 2018

How do browsers deal with persistent permissions?

- The patterns are not consistent.

- Some browsers will remember your “yes” forever.

- Others keep it only until you close the browser, or until you navigate away from the page.

- This is an inconsistent expectation for the user.
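The inconsistency is easier to see side by side. Below is a minimal Python sketch, assuming a hypothetical per-origin grant store; `Persistence`, `PermissionStore`, and the example origins are illustrative names, not any browser's internals. The same “yes” survives or disappears depending only on which lifetime was attached to it.

```python
from __future__ import annotations

from enum import Enum


class Persistence(Enum):
    """How long a granted permission lasts (hypothetical model of the behaviors above)."""
    FOREVER = "forever"  # remembered across browser restarts
    SESSION = "session"  # forgotten when the browser closes
    PAGE = "page"        # forgotten when the user navigates away


class PermissionStore:
    """Hypothetical per-origin grant store; not any real browser's implementation."""

    def __init__(self) -> None:
        self._grants: dict[tuple[str, str], Persistence] = {}

    def grant(self, origin: str, permission: str, lifetime: Persistence) -> None:
        self._grants[(origin, permission)] = lifetime

    def is_granted(self, origin: str, permission: str) -> bool:
        return (origin, permission) in self._grants

    def navigate_away(self, origin: str) -> None:
        # Drop page-scoped grants for the origin the user just left.
        self._grants = {
            key: life for key, life in self._grants.items()
            if not (key[0] == origin and life is Persistence.PAGE)
        }

    def close_browser(self) -> None:
        # Only grants meant to last forever survive a restart.
        self._grants = {
            key: life for key, life in self._grants.items()
            if life is Persistence.FOREVER
        }


# One user action ("allow notifications"), three different lifetimes.
store = PermissionStore()
store.grant("https://a.example", "notifications", Persistence.FOREVER)
store.grant("https://b.example", "notifications", Persistence.SESSION)
store.grant("https://c.example", "notifications", Persistence.PAGE)
store.navigate_away("https://c.example")
store.close_browser()
print(store.is_granted("https://a.example", "notifications"))  # True
print(store.is_granted("https://b.example", "notifications"))  # False
print(store.is_granted("https://c.example", "notifications"))  # False
```

From the user's side, all three sites received the same answer, which is exactly why the differing outcomes are hard to predict.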

Notifications are a good example.

The permission action and consequences are disconnected.

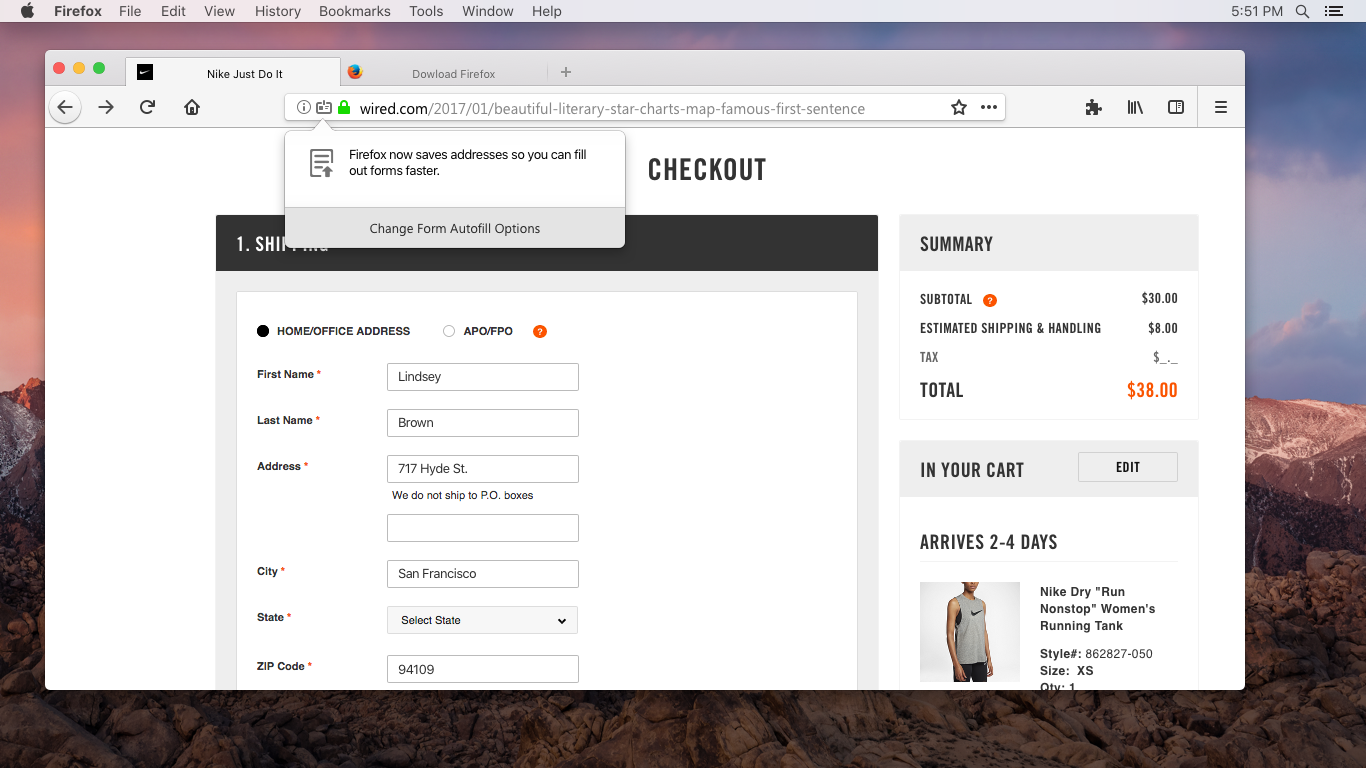

Firefox shows a prompt asking for permission. We see this a lot, but it is separate from the persistence issue.

Lean on the operating system. In many cases the operating system already has mechanisms to store this information. For instance, if a permission has been set on Android, it should be available to the web application.

There was a proposal to simplify and standardize the browser chrome around applications, with consistent signaling language and patterns.

Cookies have an expiration policy, but it can be abused by setting the maximum amount of time: 15+ years.

Sharing information in accordance with the law (two options):

- We meet legal obligations and practices

- We do whatever we want until the law bans it.

Users may not understand the implications of granting a permission until some time has passed. For instance, notifications may seem useful at first but become a problem once they are too frequent and interrupt work.

Browsers should learn a user’s preferences and immediately begin applying them. For instance, if a person continually blocks geolocation, the browser should then block it by default.
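One way a browser might learn this is to count consecutive refusals per permission type across sites. The sketch below assumes a hypothetical threshold of three denials; the threshold, the class name, and the “ask”/“block” labels are illustrative, not taken from any browser.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical: how many denials in a row before the browser stops asking.
DENY_THRESHOLD = 3


class LearnedDefaults:
    """Tracks a user's answers per permission type, across all sites."""

    def __init__(self, threshold: int = DENY_THRESHOLD) -> None:
        self.threshold = threshold
        self._denial_streak: defaultdict[str, int] = defaultdict(int)

    def record(self, permission: str, allowed: bool) -> None:
        if allowed:
            self._denial_streak[permission] = 0  # any "yes" resets the streak
        else:
            self._denial_streak[permission] += 1

    def default_for(self, permission: str) -> str:
        """Return "block" once the user has refused often enough, otherwise "ask"."""
        if self._denial_streak[permission] >= self.threshold:
            return "block"
        return "ask"


prefs = LearnedDefaults()
for _ in range(3):
    prefs.record("geolocation", allowed=False)
print(prefs.default_for("geolocation"))  # block
print(prefs.default_for("camera"))       # ask
```

Counting a streak rather than a lifetime total means a single “allow” returns the browser to asking, so an occasional legitimate use is not locked out.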

The Immersive Web

Nell Waliczek

Principal Engineer, Amazon

Virtual Reality

Richie’s plank (Internet Archive) is an example of VR with powerful impact. The user must walk on a virtual plank above the city.

Categories:

- Tethered (Oculus Rift): uses a computer or gaming console for processing.

- Mobile VR: the headset holds a phone, and the phone does the processing.

- Standalone VR: all-in-one, can have full six degrees of freedom and track movement.

AR

- Headset AR – hololens, oculus rift

- handheld ar – mobile device with AR

XR

The umbrella term for AR and VR

Environment awareness

- hit testing: you can cast a virtual ray and figure out where it intersects with the real world.

- anchoring

- point clouds

- meshes

- lighting

- semantic labeling
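Geometrically, a hit test is a ray-plane intersection. The sketch below assumes the device has already detected a flat surface, given as a point and a normal; `Vec3` and `hit_test` are hypothetical helpers, not the WebXR API, where the user agent performs the test against its own model of the world.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, other: Vec3) -> Vec3:
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def __sub__(self, other: Vec3) -> Vec3:
        return Vec3(self.x - other.x, self.y - other.y, self.z - other.z)

    def scale(self, s: float) -> Vec3:
        return Vec3(self.x * s, self.y * s, self.z * s)

    def dot(self, other: Vec3) -> float:
        return self.x * other.x + self.y * other.y + self.z * other.z


def hit_test(origin: Vec3, direction: Vec3, plane_point: Vec3,
             plane_normal: Vec3, eps: float = 1e-9) -> Vec3 | None:
    """Intersect the ray origin + t * direction (t >= 0) with a detected plane."""
    denom = plane_normal.dot(direction)
    if abs(denom) < eps:
        return None  # ray runs parallel to the surface
    t = plane_normal.dot(plane_point - origin) / denom
    if t < 0:
        return None  # the surface is behind the ray
    return origin + direction.scale(t)


# Viewer's eyes 1.6 m above a floor detected at y = 0, looking down and forward.
hit = hit_test(Vec3(0, 1.6, 0), Vec3(0, -1, -1), Vec3(0, 0, 0), Vec3(0, 1, 0))
print(hit)  # Vec3(x=0.0, y=0.0, z=-1.6)
```

One appeal of hit testing from a privacy standpoint is that a page can receive just an intersection point rather than the full real-world geometry discussed below.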

User awareness

- location in 3d space

- input sources

- eye tracking

- facial expressions

Immersive web working group

WebXR API specifications are being finalized.

There’s a repo for security and privacy concerns.

What is WebXR?

An imperative API.

It provides access to input and output capabilities commonly associated with VR and AR.

With it you can

- detect available devices

- query device capabilities

- poll for position

User consent and privacy

- fingerprinting during bootstrapping

- real world geometry

- camera access and perception of camera access

- object or image identification

- permissions

fingerprinting

- sites need a button for entering VR and AR

- some experiences require specific hardware behavior

- multiple devices may be connected

- spinning up the fun hardware just to reject isn’t a good experience

proposed mitigation

- support checks only for VR vs. AR

- inline vs. exclusive data restrictions

- user action required to enter exclusive mode

- consent requested

- actual hardware bootstrapping happens as a last step
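The proposed mitigations amount to an ordering: answer coarse questions first, require a user action and consent next, and touch the hardware last. A minimal sketch of that ordering, assuming a hypothetical `HypotheticalXRSystem` object; its names and error messages are illustrative and are not the WebXR API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


class SessionError(Exception):
    """Raised when an immersive session request is refused."""


@dataclass
class HypotheticalXRSystem:
    """Toy model of the proposed mitigations; not the WebXR API."""
    available_modes: set[str]
    user_consents: bool
    bootstrapped: list[str] = field(default_factory=list)

    def supports(self, mode: str) -> bool:
        # Coarse answer only: VR vs. AR, with no device or capability details.
        if mode not in ("vr", "ar"):
            raise ValueError("only 'vr' or 'ar' can be queried")
        return mode in self.available_modes

    def request_exclusive_session(self, mode: str, user_activation: bool) -> str:
        if not user_activation:
            raise SessionError("a user action is required to enter exclusive mode")
        if not self.supports(mode):
            raise SessionError(f"{mode} is not supported")
        if not self.user_consents:
            raise SessionError("the user declined")
        # Hardware is spun up only after every other check has passed.
        self.bootstrapped.append(mode)
        return f"{mode}-session"


xr = HypotheticalXRSystem(available_modes={"vr"}, user_consents=True)
print(xr.supports("ar"))  # False
print(xr.request_exclusive_session("vr", user_activation=True))  # vr-session
print(xr.bootstrapped)  # ['vr']
```

Because bootstrapping happens only after every check passes, a rejected request never spins up the hardware, avoiding the poor experience listed under fingerprinting.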

real world geometry

- room geometry may be used to identify when two sessions are occurring in the same space

- RWG may indicate a specific location

- inferred location history

- estimating the size of the user’s house to estimate user income

- facial geometry

- gait analysis

- credit card # geometry

Roombas map room geometry and may share it with third parties: “Your Roomba May Be Mapping Your Home, Collecting Data That Could Be Shared”

Camera access and perception thereof

Issues

- See-through vs. pass-through (see-through devices have clear glass with the image applied to the glass)

- Can users reason about the difference?

- Is there a meaningful difference if RWG is available?

- Polyfills will blur the line

Object and image identification

issues

- registered images and objects can be anything

- can be used to profile users, such as identifying the presence of specific brands of TV

- can be used to blackmail users

Permissions

Issues

- Bundling XR Permissions

- Bundling with non-XR permissions

- Upfront vs. just in time

- Duration

Some things people consider features, such as a global VR cloud for people to share, others may consider a privacy violation.

XR experiences can be so physically powerful that they create virtual memories and emotional impact. Is the user consenting to this impact?

How do you adequately express the extent of sharing people are providing when engaging in AR? It’s currently a wall of text; is there a way to simplify it and still express the complete picture?

Permissions in the XR space

- worry about fingerprinting concerns

- session setup should not fail outright if permissions are not available

- mental models: make sure they are consistent

- immersive/exclusive mode

Two modes:

- Exclusive mode: with permissions as progressive enhancement.

- Inline mode: embed a 3D experience in a page, just like canvas. When you click a button that says “use my hardware”, it opens a full-screen, immersive experience.

Our permissions model is based on the immersive experience, not the embedded/inline mode.

Consider bundling permissions when entering the immersive experience.

AR lite mode: allow the browser to do automatic image stitching while still allowing hit testing

WebRTC needed a way to determine whether a person could enter a video chat by evaluating the available hardware. For instance, don’t prompt for a video conference if there is no camera.

Constrainable pattern, section 11 (Media Capture and Streams specification).

An ordered list of constraints when requesting a media stream.

The constraints can select the camera, data rate, resolution, etc.
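A simplified model of processing an ordered constraint list: each set is tried in priority order and skipped if it cannot be satisfied together with the earlier choices. This loosely mirrors how the `advanced` constraint sets of getUserMedia are applied; the camera modes and helper names below are hypothetical, and real constraints also support ranges and `ideal`/`exact` values that are not modeled here.

```python
from __future__ import annotations

# Hypothetical modes one camera can produce.
CAMERA_MODES = [
    {"width": 640, "height": 480, "frameRate": 30},
    {"width": 1280, "height": 720, "frameRate": 30},
    {"width": 1920, "height": 1080, "frameRate": 15},
]


def satisfies(mode: dict, constraint_set: dict) -> bool:
    """True if the mode matches every exact value in the constraint set."""
    return all(mode.get(name) == value for name, value in constraint_set.items())


def apply_in_order(modes: list[dict], ordered_sets: list[dict]) -> list[dict]:
    """Narrow the candidate modes set by set, skipping any set that would leave none."""
    candidates = list(modes)
    for constraint_set in ordered_sets:
        narrowed = [m for m in candidates if satisfies(m, constraint_set)]
        if narrowed:  # compatible with earlier choices, so keep it
            candidates = narrowed
    return candidates


# Highest priority first: 1920 wide at 30 fps, then 1280 wide, then 30 fps.
preferences = [{"width": 1920, "frameRate": 30}, {"width": 1280}, {"frameRate": 30}]
print(apply_in_order(CAMERA_MODES, preferences))
# [{'width': 1280, 'height': 720, 'frameRate': 30}]
print(apply_in_order([], preferences))  # []
```

An empty list of modes (no camera at all) yields an empty result, which is the signal from the WebRTC example not to prompt for a video call in the first place.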

Platform permissions handling

Representatives

- Apple

- Brave

- Chrome web platform

- Mozilla

What should be left to browsers vs. apps?

- tie permission to a user event

- what is the value of the asset and the permission?

From an immersive web perspective, permissions take on a new dimension. Because immersion involves a new level of physicality, a permission prompt can disrupt the user experience and pull the user out of the moment.

The user could be in an uncomfortable virtual situation, e.g., about to go down a roller coaster drop. They may accept immediately just to relieve the anxiety of the situation.

It’s difficult to confirm informed consent. The browser should act as the user’s agent and steward. Users should be presented with consent requests when they need to make a decision.

Are we asking users enough questions? When we review a new API and find fingerprinting risks, should we push that decision to the user?

Some APIs expose data (geolocation), some expose functions (notifications), and some are a mix (camera, microphone). It would be good to have a grid that helps users understand when they are giving access to functionality and when to data.
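Such a grid could start as a simple classification. The sketch below encodes only the examples from this paragraph; the `Access` flags, the `GRID` table, and the wording of the descriptions are hypothetical.

```python
from enum import Flag, auto


class Access(Flag):
    DATA = auto()      # the site receives information
    FUNCTION = auto()  # the site gains an ability


# Only the examples named in the paragraph above.
GRID = {
    "geolocation": Access.DATA,
    "notifications": Access.FUNCTION,
    "camera": Access.DATA | Access.FUNCTION,
    "microphone": Access.DATA | Access.FUNCTION,
}


def describe(api: str) -> str:
    """Build a plain-language summary a prompt could show next to the request."""
    kind = GRID[api]
    parts = []
    if Access.DATA in kind:
        parts.append("shares data with the site")
    if Access.FUNCTION in kind:
        parts.append("lets the site do something")
    return f"{api}: " + " and ".join(parts)


for api in GRID:
    print(describe(api))
```

Using combinable flags rather than three fixed categories keeps the “mix” case explicit: a camera prompt states both what leaves the device and what the site can now do.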

Permissions, Policy, and Regulation – Consumer Reports Digital Standard

Consumer Reports began evaluating permissions when consumer products began sharing data

The Digital Standard

- Security

- Privacy

- Ownership

- Governance

- Consumer Reports Launches Digital Standard to Safeguard Consumers’ Security and Privacy in Complex Marketplace

- The Digital Standard (Internet Archive)

The Standard

Below is the current version of the Digital Standard. You can click on the Test Name to view and comment on a section on Github. There you can propose a change, run a test and report results, or start a conversation about how to tackle one of the open questions. Please note that you will need to be logged into your GitHub account to see the GitHub pages.

Each criterion has been color-coded to help direct your contribution and participation.

- Well understood with a developed testing approach in place.

- Under development with some outstanding questions.

- Under discussion, usually due to the sensitivity and complexity of the issue.

Minimization: only important information is collected

Automatic Content Recognition: smart TVs take snapshots of what is on screen and send them to the cloud for content recognition. It was challenged by the FTC and now must be disclosed.

Glow was a product that failed their privacy standards and was exposed for its lack of data stewardship.

CR now provides easy-to-understand support grids.

Dark Patterns (Internet Archive): a Twitter account for sharing screenshots of bad examples.

Better Ads Standard

Better Ads Standard seeks to define what could be the best experience and set standards around that.

Self-plagiarization: consent in law and code

Previous Standards Bodies: in the W3C

California AB 375

California Consumer Privacy Act

It was created by Alastair Mactaggart

polling at 80% support

minimal support for initiative from privacy orgs

Replaced the ballot measure with a bill

weaker on enforcement yet broader in what is covered

still little privacy org support

law comes into force in 2020

next fights

- clean-up bill in California

- federal preemption

California consumer privacy act

To whom does the law apply? Any company worldwide that meets one or more of these thresholds:

- annual gross revenues over $25 million

- holds personal info on 50,000 or more people/households/devices

- 50% or more of annual revenue from selling consumers’ personal info

Jurisdiction is based on the consumer being in California.
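The applicability test is an “any one of” check. A minimal sketch of the thresholds as summarized above, with a flag for the California-consumer condition; the `Business` fields and `ccpa_applies` are hypothetical, and the statute's actual definitions are more detailed than this.

```python
from dataclasses import dataclass


@dataclass
class Business:
    annual_gross_revenue_usd: float
    consumers_with_personal_info: int       # people, households, or devices
    revenue_share_from_selling_info: float  # fraction, 0.0 to 1.0
    has_california_consumers: bool


def ccpa_applies(b: Business) -> bool:
    """Any single threshold is enough, but only for consumers in California."""
    meets_threshold = (
        b.annual_gross_revenue_usd > 25_000_000
        or b.consumers_with_personal_info >= 50_000
        or b.revenue_share_from_selling_info >= 0.5
    )
    return b.has_california_consumers and meets_threshold


# A small app with a large California user base is covered despite low revenue.
print(ccpa_applies(Business(2_000_000, 80_000, 0.0, True)))  # True
```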

New rights

- know what info is being collected

- know whether their info is sold or disclosed

- say no to the sale of personal info

- access their personal info

- equal service and price

- deleting data and data portability

- over 16? right to opt out. under 16? right to opt in
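The age split reverses the default. A sketch of the rule as listed above, treating exactly 16 as the opt-out case since the opt-in requirement covers consumers under 16; the function name is hypothetical, and the statute's separate parental-consent rule for children under 13 is not modeled.

```python
def may_sell_personal_info(age: int, opted_out: bool = False, opted_in: bool = False) -> bool:
    """Simplified sale rule from the list above (hypothetical helper)."""
    if age >= 16:
        # Opt-out regime: selling is allowed until the consumer says no.
        return not opted_out
    # Opt-in regime: selling is off until the consumer says yes.
    return opted_in


print(may_sell_personal_info(30))                  # True
print(may_sell_personal_info(30, opted_out=True))  # False
print(may_sell_personal_info(15))                  # False
print(may_sell_personal_info(15, opted_in=True))   # True
```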

An empirical analysis of web site data deletion and opt out choices

Hana Habib:

PhD student in the Societal Computing program at Carnegie Mellon University

Online privacy choices

- Data deletion choices

- email communication opt outs

- targeted ad opt outs

GDPR requires privacy choices

- explicit consent

- withdraw consent at any time

- request deletion of personal info

- emphasis on usability

- intelligible language

Designing a new permissions model

- determine where web sites are offering privacy choices

- evaluate whether users can realistically exercise these choices

- identify best practices

Analyzed 25 websites from the Alexa top 50

Recorded:

- location of privacy choices

- shortest path to privacy choice

- description in privacy policy
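The “shortest path to privacy choice” is the fewest clicks from a starting page to the page that offers the choice. As an illustration only, one way to compute this automatically is a breadth-first search over a site map; the site map and page names below are hypothetical.

```python
from __future__ import annotations

from collections import deque


def clicks_to_choice(links: dict[str, list[str]], start: str, target: str) -> int | None:
    """Fewest clicks from start to target via breadth-first search, or None if unreachable."""
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        page, clicks = queue.popleft()
        if page == target:
            return clicks
        for next_page in links.get(page, []):
            if next_page not in seen:
                seen.add(next_page)
                queue.append((next_page, clicks + 1))
    return None


# Hypothetical site map: each page lists the pages it links to.
site = {
    "home": ["account", "privacy-policy"],
    "account": ["settings"],
    "settings": ["ad-preferences"],
    "privacy-policy": ["ad-preferences"],
}
print(clicks_to_choice(site, "home", "ad-preferences"))  # 2
print(clicks_to_choice(site, "home", "delete-account"))  # None
```

Breadth-first search visits pages in order of click distance, so the first time it reaches the target is guaranteed to be the shortest path.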

Requirements

- standardize terminology

- standardize functionality

- standardized placement

Ideal permissions model?

- descriptive and user-friendly terminology

- one-click permissions

- centralized location for permissions

Contextual permission models for better privacy protection

Primal Wijesekera

primal@cs.berkeley.edu

Custom permission model built for Android

A permission model that only allows access when the user expects it.

Privacy violations occur when sensitive information is used in ways that defy users’ expectations.

Reference: Helen Nissenbaum, privacy as contextual integrity

Ask on first use (AOFU)

Prompt the first time an app requests a permission.

lack of context

for instance, Uber app asking for location.

Experience sampling

Uber has accessed your location. Given a choice, would you have allowed or denied this access? yes/no

80% of participants would block at least one permission request

Research: Android Permissions Remystified: A Field Study on Contextual Integrity (2015)

The Feasibility of Dynamically Granted Permissions: Aligning Mobile Privacy with User Preferences

Contextual factors

Allow Uber to access this device’s location

visibility, foreground app
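The difference between ask-on-first-use and a contextual model shows up even with a single contextual factor. In this sketch the only context is whether the app is in the foreground, and the simulated user allows location only while actively using the app; the class and the user function are hypothetical, and the research used an ML model over richer context rather than this lookup.

```python
from __future__ import annotations

from typing import Callable

# ask(app, permission, foreground) -> the user's answer when prompted
Prompt = Callable[[str, str, bool], bool]


class PermissionPolicy:
    """Caches user answers; contextual mode also keys them on foreground visibility."""

    def __init__(self, ask: Prompt, contextual: bool) -> None:
        self.ask = ask
        self.contextual = contextual
        self.decisions: dict[tuple, bool] = {}
        self.prompts = 0

    def allow(self, app: str, permission: str, foreground: bool) -> bool:
        key = (app, permission, foreground) if self.contextual else (app, permission)
        if key not in self.decisions:
            self.prompts += 1
            self.decisions[key] = self.ask(app, permission, foreground)
        return self.decisions[key]


# Hypothetical user: fine with location while using the app, not in the background.
def user(app: str, permission: str, foreground: bool) -> bool:
    return foreground


aofu = PermissionPolicy(user, contextual=False)
ctx = PermissionPolicy(user, contextual=True)
for policy in (aofu, ctx):
    policy.allow("Uber", "location", foreground=True)
print(aofu.allow("Uber", "location", foreground=False))  # True: reuses the first answer
print(ctx.allow("Uber", "location", foreground=False))   # False: new context, new prompt
```

The contextual policy costs one extra prompt here but avoids the background access the user would have denied, which is the trade-off the error-rate comparison below measures.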

How often would users need to be prompted?

- 4 exposures per minute per user (2014)

- 6 exposures per minute per user (2016)

contextual cues helped

| Approach | Error Rate | Average Prompts |
|---|---|---|
| Ask on first use | 15.4% | 12 |
| ML Model | 3.2% | 12 |
| ML Model (lower prompt count) | 7.4% | 8 |

Using ML to generalize when a request is warranted gives higher privacy protection with fewer privacy decisions.

Permissions Bundling

A way to combat permission fatigue?

The permission prompt problem:

This page would like to

- do popups

- play sounds

- use microphone, camera

- know your location

- gather network information

- avoid being suspended when in the background

- a dozen other permissions you wouldn’t think about

This is just a basic mobile phone app, such as Skype.

Maximal bundle version

I’m a video phone app. Can you allow me to do phone-like things?

The consent model – The parties

- The user (good by definition)

- the platform (good, because all is lost if it isn’t)

- the application (good, bad, or ugly)

Ways to mediate

- Standardize a number of roles with associated capabilities, and let the app ask for a role

- Let the app ask for a bunch of permissions; the browser guesses what the user wants

- App asks for permissions, user grades trust in the page, browser infers permissions
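The first mediation option, standardized roles, could look like this: the app asks for one role, the user answers one question, and the browser grants the whole bundle. The role names and capability sets below are hypothetical.

```python
from __future__ import annotations

# Hypothetical standardized roles; role and capability names are illustrative only.
ROLES: dict[str, frozenset[str]] = {
    "video-phone": frozenset({"camera", "microphone", "notifications", "run-in-background"}),
    "maps": frozenset({"geolocation"}),
}


def request_role(role: str, user_approves: bool) -> frozenset[str]:
    """The app names one role; one user answer grants or denies the whole bundle."""
    if role not in ROLES or not user_approves:
        return frozenset()
    return ROLES[role]


granted = request_role("video-phone", user_approves=True)
print("camera" in granted)       # True
print("geolocation" in granted)  # False: outside the role, so it needs its own request
```

The trade-off is the bundling concern raised at the start of the workshop: the user approves “video phone” without seeing each sub-permission, so the role definitions themselves would need to be standardized and well understood.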